Many collective activities performed by social insects result in complex spatiotemporal patterns, which one might intuitively attribute to the complexity of each individual. However, what we know about insects such as ants, bees and termites suggests that they do not possess such sophisticated capabilities individually. A more plausible explanation is the phenomenon biologists call stigmergy.

The main idea behind stigmergy is that an agent's behaviour changes certain features of the environment, and that behaviour is in turn determined by those same features. According to Holland [1], stigmergy is essentially a mechanism which allows an environment to structure itself through the activities of agents within the environment.

Stigmergy can be described as a form of self-organisation which often leads to complex emergent behaviours. Understanding the processes of self-organisation would be beneficial to technology, especially with the advent of distributed and ubiquitous computing.

One of the first attempts to understand stigmergy was made by Beckers [3], who presented a series of experiments in which a group of very simple mobile robots gathered 81 randomly distributed objects and clustered them into one pile. The coordination of the agents’ movements was achieved through stigmergy.
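To make the feedback loop concrete, here is a toy one-dimensional model loosely inspired by the puck-gathering robots described above. It is only a sketch: the ring world, the single agent, the `threshold` rule and all names are assumptions made for illustration, written in Python for brevity, and it is not the code used in the project. The agent never communicates with anything; it only reacts to how many pucks lie in the cell it enters, and by moving pucks it changes what it will react to later.

```python
import random


def simulate(cells, threshold=3, steps=10_000, seed=0):
    """Run one agent on a ring of cells and return (cells, load) afterwards.

    cells[i] is the number of pucks lying in cell i. The agent carries
    `load` pucks and always keeps load < threshold.
    """
    rng = random.Random(seed)
    cells = list(cells)
    pos, load = 0, 0
    for _ in range(steps):
        # Random walk: step one cell left or right on the ring.
        pos = (pos + rng.choice((-1, 1))) % len(cells)
        if load + cells[pos] < threshold:
            # Light enough to push: scoop up whatever lies in this cell.
            load += cells[pos]
            cells[pos] = 0
        else:
            # Too heavy to push: leave the load on this pile, carry on empty.
            cells[pos] += load
            load = 0
    return cells, load


start = [1] * 12 + [0] * 8          # 12 single pucks on a ring of 20 cells
final_cells, final_load = simulate(start)
piles = [c for c in final_cells if c]
print("piles after the run:", piles, "still carried:", final_load)
```

In this toy model a pile that has reached the threshold is never broken up again, so a run typically ends with a few piles rather than a single one. It is meant only to show how local reactions to the environment can reduce the number of piles without any central coordination.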

The project presented in this post simulates the experiments by Beckers and extends the sensing capabilities of the agents in order to achieve shorter task completion times and better efficiency.

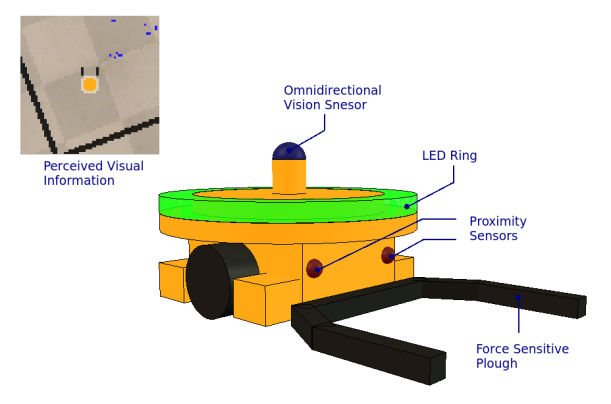

The simulated swarm consists of 10 worker robots like the one pictured above. Each worker has a differential drive with two passive castor wheels and a force-sensitive plough at the front, which allows it to push resource cubes around the environment. Sensing is performed by proximity sensors at the front and an omnidirectional vision sensor on top. In addition, every robot has an LED ring that indicates its current behaviour state.

The behaviour of the workers can be divided into four sub-behaviours, organised in a subsumption architecture. They are listed below in descending order of priority.

- Turn towards a pile of resources – if the robot is carrying resource cubes and it sees only one pile, which is not smaller than the largest one the worker has seen so far, then it turns towards the pile and flashes its LEDs in red. This state inhibits any other behaviour.

- Leave resources – if the robot is carrying too many resource cubes, that is, the force exerted on the plough is above a certain threshold, then the robot reverses, leaving the resource cubes behind.

- Avoid obstacles – when the robot senses an obstacle, it turns so that a collision is avoided. If both proximity sensors are active, the robot turns by a random angle. Note that the resource cubes are not detected by the proximity sensors.

- Move forward – if none of the conditions for the higher-priority states is met, the robot simply moves forward.
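The priority ordering in the list above can be sketched as a simple arbitration function. This is a minimal Python illustration, not the project's C++ implementation: the `Percept` fields, the `FORCE_THRESHOLD` value, the behaviour names and the rule that any non-zero plough force means "carrying" are all assumptions introduced here.

```python
from dataclasses import dataclass

# Hypothetical value: plough force above which the load counts as "too many".
FORCE_THRESHOLD = 3.0


@dataclass
class Percept:
    """One snapshot of a robot's sensors (field names are illustrative)."""
    plough_force: float        # force currently measured on the plough
    left_obstacle: bool        # left proximity sensor triggered
    right_obstacle: bool       # right proximity sensor triggered
    visible_piles: list        # sizes of the piles in the current camera image
    largest_pile_seen: float = 0.0  # largest pile this robot has seen so far


def select_behaviour(p: Percept) -> str:
    """Return the highest-priority sub-behaviour whose condition holds."""
    carrying = p.plough_force > 0.0  # assumption: any force means cubes on board

    # 1. Turn towards a pile: carrying cubes, exactly one pile visible, and it
    #    is not smaller than the largest pile seen so far. Inhibits the rest.
    if (carrying and len(p.visible_piles) == 1
            and p.visible_piles[0] >= p.largest_pile_seen):
        return "turn_towards_pile"

    # 2. Leave resources: the plough force is above the threshold.
    if p.plough_force > FORCE_THRESHOLD:
        return "leave_resources"

    # 3. Avoid obstacles: at least one proximity sensor is active.
    if p.left_obstacle or p.right_obstacle:
        return "avoid_obstacle"

    # 4. Default: nothing with a higher priority applies.
    return "move_forward"
```

Because the checks are ordered, a robot that is overloaded and also facing an obstacle still turns towards a qualifying pile first, which mirrors the statement that the top state inhibits every other behaviour.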

Beckers used a similar architecture in his experiments, but his robots were not able to turn towards piles of resources. Using a simple vision algorithm to detect areas with a high density of resources is a novel approach which speeds up the gathering process by extracting more information about the environment. This particular implementation relies on vision, but any other form of environment sensing, such as measuring pheromone levels, could be used.

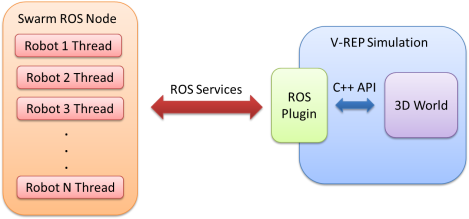

The simulation was implemented using the Virtual Robot Experimentation Platform (V-REP) [4] and the Robot Operating System (ROS) [5].

- V-REP is a powerful yet easy-to-use simulator designed for robotics. It supports many different APIs and can be extended with custom plugins.

- ROS is a distributed pseudo operating system designed for robotics applications. It provides the facilities to create nodes (equivalent to processes) and to exchange information between them either synchronously or asynchronously.

First, the model of the robot was created and the environment was set up in V-REP. Second, a ROS package was developed in C++ which implements a V-REP plugin enabling communication between ROS and V-REP. The plugin provides one service to get the state of a robot and another to set its motor velocities and the colour of its LED ring. Third, a ROS package was created which provides the behaviour of each robot. The package spawns a ROS node which runs one thread per robot. Each thread performs the ‘sense-act’ cycle of a robot and determines the currently active sub-behaviour as described above. To detect piles of cubes in the visual field of a robot, a simple algorithm which performs colour thresholding and connected component analysis on the input image was implemented using the OpenCV C++ API.
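The two vision steps, colour thresholding followed by connected component analysis, can be illustrated without any dependencies. The sketch below is plain Python rather than the OpenCV C++ code used in the project, and the function names, the colour bounds and the choice of 4-connectivity are assumptions for illustration. In OpenCV the corresponding operations would typically be `cv::inRange` and a connected-components or contour routine.

```python
from collections import deque


def threshold_mask(image, lower, upper):
    """Binary mask: True where every channel of a pixel lies in [lower, upper].

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    return [
        [all(lo <= c <= hi for c, lo, hi in zip(px, lower, upper)) for px in row]
        for row in image
    ]


def component_areas(mask):
    """Pixel counts of the 4-connected True regions in `mask`, largest first."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    areas = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill from an unvisited foreground pixel.
            seen[y][x] = True
            queue = deque([(y, x)])
            area = 0
            while queue:
                cy, cx = queue.popleft()
                area += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            areas.append(area)
    return sorted(areas, reverse=True)


# Tiny example image: G is an assumed cube colour, K is background.
G, K = (0, 200, 0), (0, 0, 0)
image = [
    [G, G, K, K],
    [G, K, K, G],
    [K, K, K, G],
]
mask = threshold_mask(image, lower=(0, 150, 0), upper=(50, 255, 50))
print(component_areas(mask))  # two regions, of 3 and 2 pixels
```

The area of each connected region can then serve as a rough estimate of pile size, which is the quantity the pile-seeking sub-behaviour compares against the largest pile seen so far.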

If you are interested in the source code or the simulation files for the project, just let me know using the contact form here.

If the embedded player does not work for whatever reason, follow that link.

The video shows a single run of the 10 robots gathering 100 randomly distributed cubes into a single pile. It is recommended to watch the video in 1080p resolution.

Comparing the simulation results with those reported by Beckers reveals many similarities, which suggests that the experiment was implemented and performed correctly.

First of all, the clustering of the cubes can be described in four stages:

- Stage 1: Initially, the cubes are randomly distributed in the environment.

- Stage 2: After several minutes, there are many small clusters.

- Stage 3: After 10-20 minutes, the cubes are grouped into several larger clusters.

- Stage 4: Eventually, all cubes are in a single pile.

The last stage takes the longest, as the probability of a robot bumping into a cluster becomes smaller. This process is sped up by the pile-seeking sub-behaviour. Beckers reports that 5 robots gather 81 pucks together in 180 minutes in an arena of 2.5 m x 2.5 m, which gives a robot density of 0.8 robots per square metre. The video shows 10 robots gathering 100 cubes in 94 minutes in an arena of 4 m x 4 m, which gives a density of about 0.63 robots per square metre. The pile-seeking behaviour has thus roughly halved the time required to gather all cubes, in an arena about two and a half times larger and at a somewhat lower robot density. That improvement is achieved at the expense of the simplicity of the robot workers.
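The comparison can be checked with a few lines of arithmetic, using only the robot counts, arena sides and completion times quoted above. The variable and function names are illustrative.

```python
def robot_density(robots, side_m):
    """Robots per square metre in a square arena with the given side length."""
    return robots / (side_m * side_m)


# Figures quoted in the text.
beckers_density = robot_density(robots=5, side_m=2.5)      # 5 / 6.25 m^2
simulation_density = robot_density(robots=10, side_m=4.0)  # 10 / 16 m^2

area_ratio = (4.0 * 4.0) / (2.5 * 2.5)   # simulated arena vs Beckers' arena
time_ratio = 94 / 180                    # simulated time vs Beckers' time

print(f"Beckers:    {beckers_density:.3f} robots/m^2")
print(f"Simulation: {simulation_density:.3f} robots/m^2")
print(f"Arena area ratio: {area_ratio:.2f}")
print(f"Time ratio:       {time_ratio:.2f}")
```

With a 4 m x 4 m arena the simulated density works out to 0.625 robots per square metre and the arena area to 2.56 times that of Beckers' arena, so the time ratio of roughly 0.52 was obtained in a larger arena at a slightly lower robot density.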

In conclusion, stigmergy is a powerful mechanism that enables agents which sense and act only locally to achieve complex global behaviour. The efficiency of that behaviour is limited by the complexity of each individual, not by the mechanisms underlying stigmergy.

Reading:

[1] Holland O. and Melhuish C., ‘Stigmergy, self-organization, and sorting in collective robotics’, Artificial Life, 5(2), 1999, pp. 173-202.

[2] Bonabeau E., Theraulaz G., Deneubourg J.L., Aron S. and Camazine S., ‘Self-organization in social insects’, Trends in Ecology & Evolution, 12(5), 1997, pp. 188-193.

[3] Beckers R., Holland O.E. and Deneubourg J.L., ‘From local actions to global tasks: Stigmergy and collective robotics’, in Brooks R. and Maes P. (eds.), Artificial Life IV, MIT Press, 1994.

[4] Virtual Robot Experimentation Platform (V-REP), http://www.coppeliarobotics.com/

[5] Robot Operating System (ROS), http://www.ros.org/

[6] Open Computer Vision Library (OpenCV), http://opencv.willowgarage.com/